Announcing SAM 3 and SAM 3D: Revolutionizing Image and Video Segmentation with AI

Today, Meta is introducing SAM 3 and SAM 3D, the latest models in the Segment Anything Collection. The models are designed to detect, track, and reconstruct objects in both 2D and 3D, opening the way to new applications across many domains. Both are accessible through the Segment Anything Playground, where they aim to simplify video editing and offer new ways to perceive and interact with the visual world.

Advancing Object Detection with SAM 3

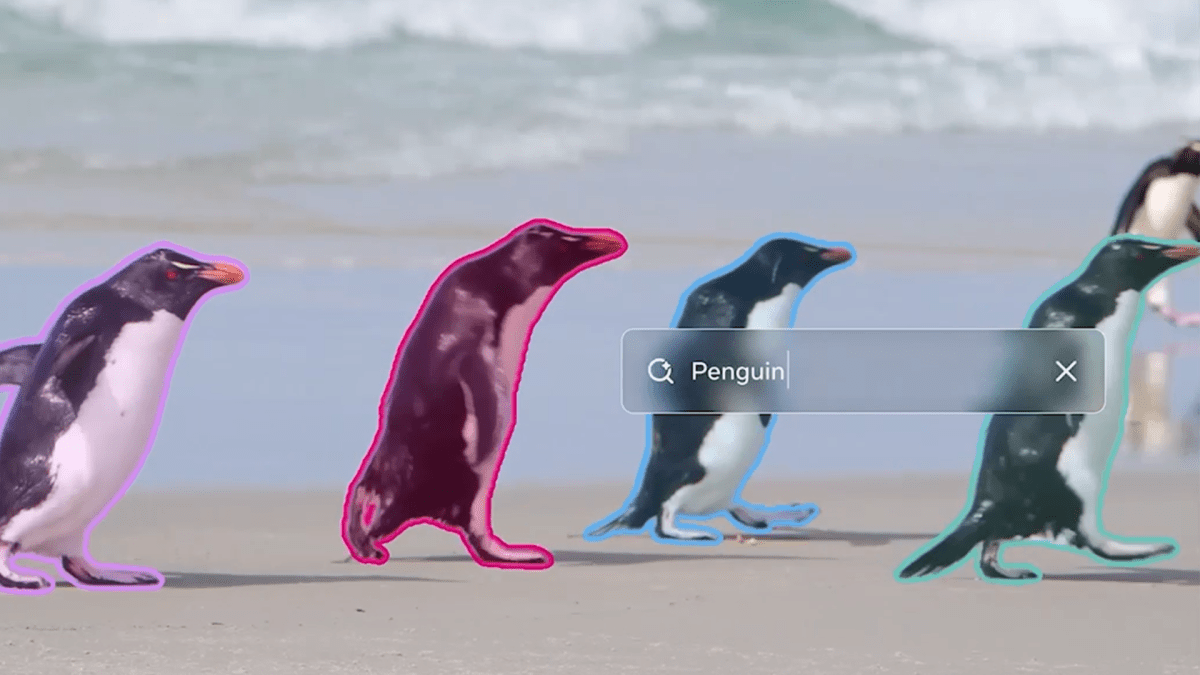

SAM 3 is a significant step forward in detecting and tracking objects in images and videos. Its predecessors, SAM 1 and SAM 2, relied solely on visual prompts such as clicks, boxes, and masks. SAM 3 adds the ability to use detailed text prompts, so users can specify objects more precisely. This addresses a long-standing weakness of AI models, which often struggle to relate language to specific visual elements.

Historically, AI models have operated with a fixed set of text labels, capable of identifying basic concepts such as "car" or "bus." However, they often falter when tasked with recognizing more nuanced concepts, like "yellow school bus." SAM 3 addresses this issue by accepting a broader range of text inputs. For instance, when prompted with "red baseball cap," SAM 3 can accurately segment all matching objects within an image or video. Moreover, when integrated with multimodal large language models, SAM 3 can interpret and respond to more complex text prompts, such as "people sitting down, but not wearing a red baseball cap."
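One way to picture a compound prompt like the last one is as set logic over simpler segmentation results. The sketch below is an illustration only, not the method used by SAM 3 or by any multimodal model. It assumes some upstream model has already returned boolean instance masks for "people sitting down" and for "red baseball cap". The function name `exclude_overlapping` and the `min_overlap` threshold are hypothetical.

```python
import numpy as np

def exclude_overlapping(candidates, excluded, min_overlap=0.05):
    """Keep candidate masks that do not overlap any excluded mask.

    candidates, excluded: lists of boolean arrays with identical shape.
    min_overlap: fraction of an excluded mask's area that must fall inside
    a candidate before the two count as overlapping (assumed value).
    """
    kept = []
    for cand in candidates:
        overlaps = False
        for ex in excluded:
            ex_area = ex.sum()
            if ex_area == 0:
                continue  # an empty mask cannot overlap anything
            shared = np.logical_and(cand, ex).sum()
            if shared / ex_area >= min_overlap:
                overlaps = True
                break
        if not overlaps:
            kept.append(cand)
    return kept

# Toy data: two "seated person" masks and one "red cap" mask
# that sits on top of the first person.
person_a = np.zeros((6, 6), dtype=bool)
person_a[0:4, 0:2] = True
person_b = np.zeros((6, 6), dtype=bool)
person_b[0:4, 4:6] = True
cap = np.zeros((6, 6), dtype=bool)
cap[0:1, 0:2] = True

seated_without_cap = exclude_overlapping([person_a, person_b], [cap])
```

In this toy case only `person_b` survives the filter. A real pipeline would need a more careful association between a person and the cap they wear, since a cap mask may only touch the person mask rather than overlap it.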

SAM 3 is expected to enable more sophisticated editing and transformation of videos and images in creative media tools. In the near future, video creation apps like Edits will use SAM 3 to apply effects to specific people or objects within a video. These capabilities will also soon be integrated into Vibes on the Meta AI app and made available on meta.ai.

Bringing the Physical World to Life with SAM 3D

SAM 3D introduces two open-source models that reconstruct 3D objects from a single image, covering both everyday objects and human bodies. SAM 3D Objects handles object and scene reconstruction, while SAM 3D Body estimates human body shape and pose. According to Meta, both models significantly outperform existing methods.

In collaboration with artists, Meta has developed SAM 3D Artist Objects, an evaluation dataset covering a diverse range of images and objects. The dataset is intended to provide a more rigorous way to measure research progress in 3D reconstruction.

The release of SAM 3D is a step toward using large-scale data to tackle the complexity of the physical world. Potential applications range from robotics and science to sports medicine. Creators and researchers can also use it to explore augmented and virtual reality, generate assets for games, or experiment with AI-enabled 3D modeling.

SAM 3D is already being put to practical use in Facebook Marketplace. The new "View in Room" feature lets users visualize the style and fit of home decor items, such as lamps or tables, in their own spaces before deciding to buy.

Exploring Cutting-Edge Models on Segment Anything Playground

The Segment Anything Playground platform provides an accessible entry point for users to experiment with SAM 3 and SAM 3D. No technical expertise is required to get started. Users can upload images or videos and use short text phrases to prompt SAM 3 to cut out matching objects. Alternatively, SAM 3D enables users to view scenes from new perspectives, virtually rearrange elements, or add captivating 3D effects. The platform also offers a variety of templates, ranging from practical options like pixelating faces and license plates to creative video edits featuring spotlight effects and motion trails.
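To give a sense of what a template such as face or license plate pixelation involves once a mask exists, here is a minimal sketch. It is not the Playground's implementation. It assumes the image is an H x W x C `uint8` NumPy array and that a segmentation model has already produced an H x W boolean mask. The function name `pixelate_masked` and the `block` size are hypothetical.

```python
import numpy as np

def pixelate_masked(image, mask, block=8):
    """Return a copy of `image` with the masked pixels pixelated.

    Every block x block tile that touches the mask has its masked pixels
    replaced by the tile's mean color. Pixels outside the mask are unchanged.
    """
    out = image.copy()
    height, width = mask.shape
    for y in range(0, height, block):
        for x in range(0, width, block):
            tile_mask = mask[y:y + block, x:x + block]
            if not tile_mask.any():
                continue  # tile lies entirely outside the masked region
            tile = image[y:y + block, x:x + block]
            mean_color = tile.reshape(-1, tile.shape[-1]).mean(axis=0)
            # The slice is a view into `out`, so this assignment edits `out`.
            out[y:y + block, x:x + block][tile_mask] = mean_color.astype(image.dtype)
    return out

# Toy example: a 4 x 4 RGB image whose top-left 2 x 2 corner is masked.
image = np.arange(48, dtype=np.uint8).reshape(4, 4, 3)
mask = np.zeros((4, 4), dtype=bool)
mask[0:2, 0:2] = True
result = pixelate_masked(image, mask, block=2)
```

A larger `block` gives a coarser and more anonymizing result. For video, the same function could be applied frame by frame using the per-frame masks from a tracker.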

As part of this launch, Meta is releasing the SAM 3 model weights, a new evaluation benchmark dataset for open-vocabulary segmentation, and a research paper detailing how SAM 3 was built. Meta is also partnering with the Roboflow annotation platform so that users can annotate data and fine-tune SAM 3 for their specific needs.

For SAM 3D, Meta is sharing model checkpoints and inference code, and is introducing a new benchmark for 3D reconstruction. The benchmark dataset features a wide variety of images and objects and is intended to be more realistic and more challenging than existing 3D benchmarks, giving researchers a stricter measure of progress in 3D modeling.

Meta is eager to share these innovative models with the public, empowering individuals to explore their creativity, build new experiences, and push the boundaries of what’s possible with AI. The company is excited to see the diverse range of creations that users will generate with SAM 3 and SAM 3D.

For further details on SAM 3 and SAM 3D, readers are encouraged to visit the AI at Meta blog, where they can delve deeper into the capabilities and potential applications of these groundbreaking models.

Together, SAM 3 and SAM 3D extend AI-driven segmentation from images and video to text-prompted detection and single-image 3D reconstruction. For researchers and creators alike, they offer new tools for working with visual content across a range of domains.
