NVIDIA’s Graph Processing Milestone: A New Era in High-Performance Computing

NVIDIA has claimed the top spot on the Graph500 list, setting a new high mark for large-scale graph processing. The result is notable not only for its speed but also for the efficiency with which it handles complex, irregular data structures at an unprecedented scale.

Record-Breaking Performance

NVIDIA recently announced a result of 410 trillion traversed edges per second (TEPS) on a commercially available cluster, securing the number one position on the 31st edition of the Graph500 breadth-first search (BFS) list. The benchmark ran on an accelerated computing cluster hosted at a CoreWeave data center in Dallas. The run used 8,192 NVIDIA H100 GPUs to process a graph of 2.2 trillion vertices and 35 trillion edges, and delivered more than double the performance of comparable solutions on the list, including those hosted in national laboratories.

To put this achievement into perspective, imagine that every person on Earth had 150 friends. The resulting graph of social relationships would have about 1.2 trillion edges, and NVIDIA and CoreWeave’s solution could search through all of them in roughly three milliseconds. Speed is only part of the story; the real breakthrough lies in the efficiency of the system. A comparable top 10 entry on the Graph500 list used about 9,000 nodes, whereas NVIDIA’s run required just over 1,000 nodes and delivered three times better performance per dollar.
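The three-millisecond figure can be checked with a few lines of arithmetic. The sketch below assumes a world population of roughly 8 billion (a round number, not taken from the announcement) and counts each friendship once per person; the per-GPU figure is simply the headline result divided evenly across the GPUs, which is an illustration rather than a measured value.

```python
# Back-of-the-envelope check of the figures quoted above.
TEPS = 410e12   # traversed edges per second, from the announcement
GPUS = 8192     # H100 GPUs used in the run

# Assumption: about 8 billion people, each with 150 friends.
people = 8e9
friends_per_person = 150
social_edges = people * friends_per_person

# Time to traverse every edge once at the benchmark rate.
seconds = social_edges / TEPS

# Naive even split of the headline rate across all GPUs.
per_gpu_teps = TEPS / GPUS

print(f"social graph edges: {social_edges:.2e}")
print(f"time to traverse:   {seconds * 1e3:.2f} ms")
print(f"per-GPU throughput: {per_gpu_teps / 1e9:.1f} billion TEPS")
```

Under these assumptions the traversal takes a little under three milliseconds, which matches the figure in the text, and each GPU accounts for roughly 50 billion traversed edges per second.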

The result draws on NVIDIA’s full stack of compute, networking, and software technologies: the NVIDIA CUDA platform, Spectrum-X networking, H100 GPUs, and a new active messaging library. Together, these technologies allowed NVIDIA to push the boundaries of performance while minimizing the hardware footprint.

Understanding Graphs at Scale

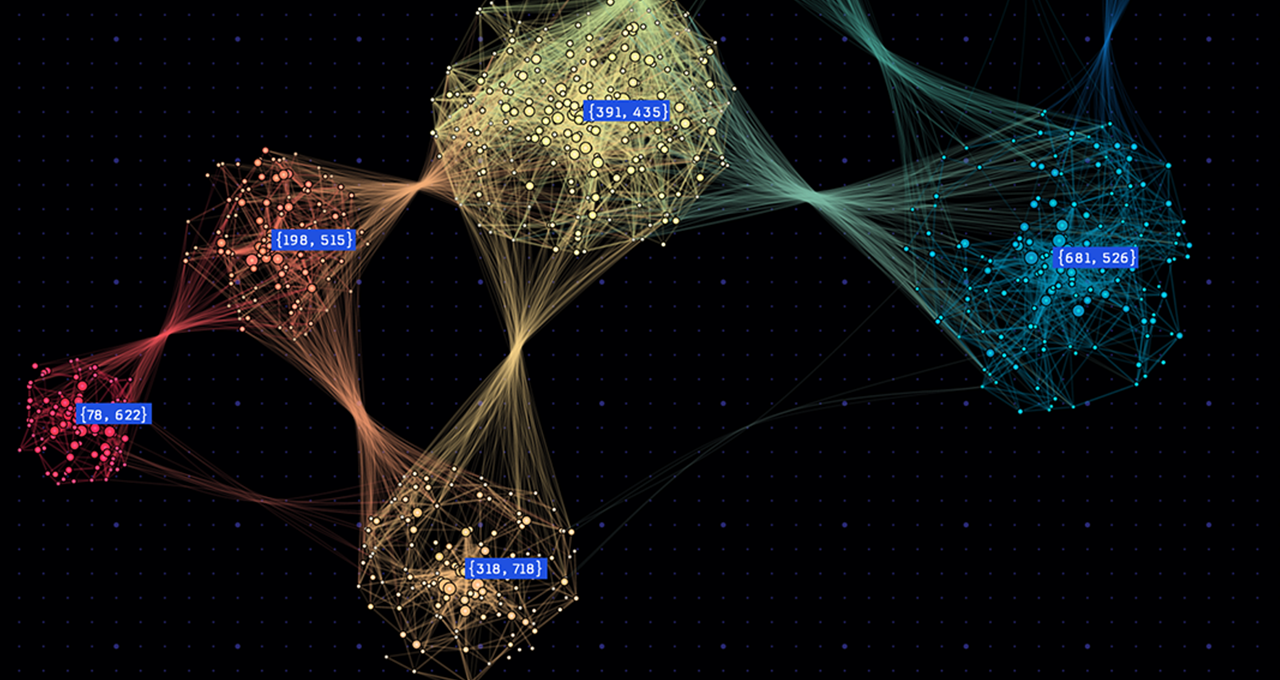

Graphs are fundamental to modern technology: they represent the relationships between pieces of information in vast networks, and they appear in social networks, banking applications, and many other settings. On LinkedIn, for instance, each user’s profile is a vertex, and each connection between two users is an edge. While some users may have as few as five connections, others might have as many as 50,000. This variability makes the graph sparse and irregular. Unlike an image or a language model, which is structured and dense, a graph is unpredictable.
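The vertex-and-edge picture above can be sketched as an adjacency structure. The names and connections below are a made-up toy network, and `build_adjacency` and `density` are illustrative helpers rather than part of any NVIDIA or LinkedIn software.

```python
from collections import defaultdict

# Hypothetical toy network: each pair is one undirected connection.
connections = [
    ("ana", "bo"), ("ana", "cy"), ("ana", "di"), ("ana", "ed"),
    ("bo", "cy"), ("ed", "fay"),
]

def build_adjacency(edges):
    """Map each vertex to the set of its neighbours (undirected graph)."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return dict(adj)

def density(num_vertices, num_edges):
    """Fraction of all possible undirected edges that are present."""
    return num_edges / (num_vertices * (num_vertices - 1) / 2)

adjacency = build_adjacency(connections)

# Degree = number of connections per vertex; note how uneven it is.
degrees = {v: len(nbrs) for v, nbrs in adjacency.items()}
toy_density = density(len(adjacency), len(connections))

# The benchmark graph from the article: 2.2 trillion vertices, 35 trillion edges.
benchmark_density = density(2.2e12, 35e12)

print(degrees)
print(f"toy density:       {toy_density:.2f}")
print(f"benchmark density: {benchmark_density:.2e}")
```

Even in this tiny example one vertex has four connections while others have one. At the scale of the benchmark graph, only a vanishingly small fraction of possible edges exists, which is what "sparse" means in practice.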

The Graph500 BFS benchmark has long been an industry standard because it measures a system’s ability to navigate this irregularity at scale. The BFS test measures how quickly a system can traverse the vertices and edges of a graph, reported in TEPS. A high TEPS score indicates fast interconnects (the cables and switches between compute nodes), high memory bandwidth, and software that can exploit both. The benchmark therefore validates the engineering of the entire system, not just the speed of its CPU or GPU, and effectively measures how quickly a system can "think" and associate disparate pieces of information.
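The kind of measurement described above can be illustrated with a single-process breadth-first search that counts the edges it scans and divides by the elapsed time. This is a simplified sketch: the official benchmark has its own rules for graph generation, edge counting, and result validation, and the small `graph` below is made up for illustration.

```python
import time
from collections import deque

def bfs(adjacency, root):
    """Breadth-first search; returns (parent map, adjacency entries scanned)."""
    parent = {root: root}
    frontier = deque([root])
    edges_scanned = 0
    while frontier:
        u = frontier.popleft()
        for v in adjacency[u]:
            edges_scanned += 1
            if v not in parent:      # first time we reach v
                parent[v] = u
                frontier.append(v)
    return parent, edges_scanned

# Hypothetical undirected graph stored as symmetric adjacency lists.
graph = {
    0: [1, 2],
    1: [0, 3],
    2: [0, 3],
    3: [1, 2, 4],
    4: [3],
    5: [],   # isolated vertex, never reached from 0
}

start = time.perf_counter()
parent, edges_scanned = bfs(graph, 0)
elapsed = time.perf_counter() - start

# Each undirected edge appears twice in symmetric adjacency lists.
undirected_edges = edges_scanned // 2
teps = undirected_edges / elapsed if elapsed > 0 else float("inf")

print(f"reached {len(parent)} vertices over {undirected_edges} edges")
print(f"approximate TEPS: {teps:.3g}")
```

On one machine the loop is limited by memory access; on a cluster, most neighbours live on other nodes, which is why the score depends so heavily on the network.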

Current Techniques for Graph Processing

GPUs are best known for accelerating dense workloads such as AI training, while the largest sparse linear algebra and graph workloads have traditionally relied on CPU architectures. In graph processing, CPUs move graph data across compute nodes. As the graph scales to trillions of edges, this constant movement creates bottlenecks and slows down communication.

Developers have employed various software techniques to mitigate this issue. A common strategy is to process graph data where it resides by using active messages: small messages that carry an operation to the node holding the data and are grouped together to maximize network efficiency. While this technique significantly accelerates processing, active messaging has typically been designed to run on CPUs and is limited by the throughput and computational capabilities of CPU systems.
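The idea of aggregated active messages can be sketched as a single-process simulation. This is not NVIDIA's library or any real messaging API: `NUM_RANKS`, `BATCH_SIZE`, `owner`, `handler`, and `deliver` are hypothetical names, ranks are simulated one after another, and vertices are assigned to ranks by a simple modulo rule.

```python
from collections import defaultdict

NUM_RANKS = 2    # hypothetical number of compute nodes
BATCH_SIZE = 4   # hypothetical aggregation threshold per destination

def owner(v):
    """Assumed partitioning: vertex v lives on rank v mod NUM_RANKS."""
    return v % NUM_RANKS

def distributed_bfs(adjacency, root):
    parent = [dict() for _ in range(NUM_RANKS)]       # per-rank parent maps
    next_frontier = [[] for _ in range(NUM_RANKS)]
    stats = {"messages": 0, "batches": 0}

    def handler(rank, v, u):
        """Active-message handler: runs on the rank that owns v."""
        if v not in parent[rank]:
            parent[rank][v] = u
            next_frontier[rank].append(v)

    def deliver(dest, batch):
        """One simulated network transfer carrying many small messages."""
        stats["batches"] += 1
        for v, u in batch:
            handler(dest, v, u)

    handler(owner(root), root, root)
    while any(next_frontier):
        frontier = [list(f) for f in next_frontier]
        for f in next_frontier:
            f.clear()
        for rank in range(NUM_RANKS):
            outbox = defaultdict(list)    # per-destination send buffers
            for u in frontier[rank]:
                for v in adjacency[u]:
                    dest = owner(v)
                    if dest == rank:      # local vertex: no network needed
                        handler(rank, v, u)
                        continue
                    outbox[dest].append((v, u))
                    stats["messages"] += 1
                    if len(outbox[dest]) >= BATCH_SIZE:
                        deliver(dest, outbox.pop(dest))
            for dest, batch in outbox.items():   # flush partial batches
                deliver(dest, batch)

    merged = {}
    for p in parent:
        merged.update(p)
    return merged, stats

# Hypothetical small undirected graph.
graph = {0: [1, 2, 3, 4, 5], 1: [0, 6], 2: [0, 6], 3: [0],
         4: [0], 5: [0, 7], 6: [1, 2], 7: [5]}

parent, stats = distributed_bfs(graph, 0)
print(parent)
print(stats)
```

The graph data never moves: only small `(vertex, parent)` requests travel, and they are grouped so that the number of transfers stays well below the number of messages. In a real system the batch size trades latency against network efficiency.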

Reengineering Graph Processing for the GPU

To speed up the BFS run, NVIDIA devised a full-stack, GPU-only solution that reimagines how data moves across the network. A custom software framework built on InfiniBand GPUDirect Async (IBGDA) and the NVSHMEM parallel programming interface enables GPU-to-GPU active messages. With IBGDA, the GPU can communicate directly with the InfiniBand network interface card, allowing for efficient message aggregation.

The system was engineered from the ground up to support hundreds of thousands of GPU threads sending active messages simultaneously, compared with just hundreds of threads on a CPU. As a result, active messaging runs entirely on GPUs, bypassing the CPU and taking full advantage of the massive parallelism and memory bandwidth of NVIDIA H100 GPUs. The GPUs send the messages, move them across the network, and process them on the receiving end.

Running on the stable, high-performance infrastructure of NVIDIA partner CoreWeave, this orchestration doubled the performance of comparable runs while using a fraction of the hardware and at a significantly reduced cost.

Accelerating New Workloads

This breakthrough has far-reaching implications for high-performance computing (HPC). Fields like fluid dynamics and weather forecasting, which rely on similar sparse data structures and communication patterns, are poised to benefit greatly from this advancement. For decades, these fields have been constrained by CPUs at the largest scales, even as data scales from billions to trillions of edges. NVIDIA’s success on the Graph500 list, along with two other top 10 entries, validates a new approach to HPC at scale.

With NVIDIA’s full-stack orchestration of computing, networking, and software, developers now have access to technologies like NVSHMEM and IBGDA to efficiently scale their largest HPC applications. This enables supercomputing performance on commercially available infrastructure, democratizing access to acceleration for massive workloads.

For more information on the latest Graph500 benchmarks and NVIDIA networking technologies, you can visit the Graph500 website or NVIDIA’s official networking page.
