The Rise of Mixture-of-Experts Models in Artificial Intelligence

Artificial intelligence is undergoing a significant shift, with Mixture-of-Experts (MoE) models emerging as the architecture of choice for leading AI systems. These models, inspired by the efficiency of the human brain, are setting new benchmarks in AI performance and capability.

What Makes MoE Models So Effective?

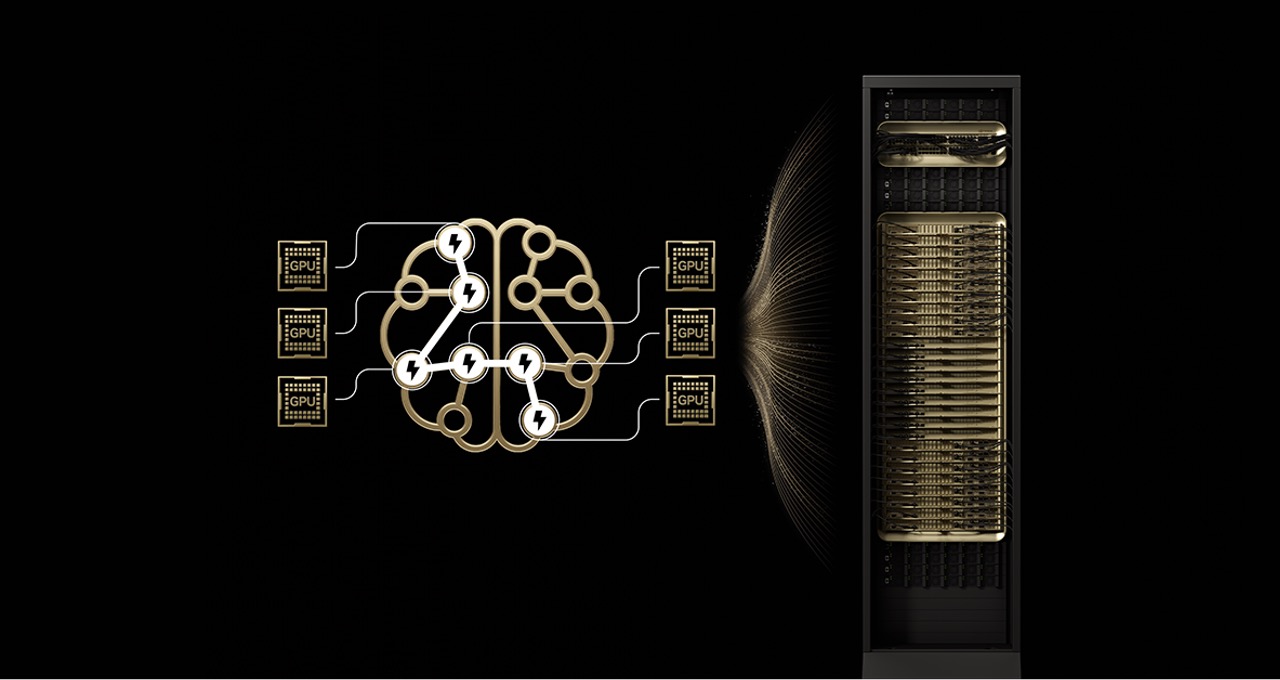

Building ever-larger models with dense architectures was once the gold standard in AI. Dense models activate all of their parameters to generate every token, which demands immense computational power and energy. MoE models offer a more efficient alternative. Much as the human brain engages specific regions for different tasks, an MoE model activates only the "experts" needed for a given token, improving both performance and energy efficiency.

The MoE architecture is defined by its ability to selectively engage specialized “experts” within the model. For each token, only the most relevant experts are activated, so although the model may contain hundreds of billions of parameters, only a fraction of them is used to process any given token. This selective activation not only boosts performance but also significantly reduces computational cost.
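
To make the "fraction of parameters" idea concrete, the following minimal sketch counts total versus per-token active parameters for a hypothetical MoE configuration. Every size in it is invented for illustration, and the split into "shared" and "expert" parameters is a simplifying assumption (real models also include router weights and other components).

```python
from dataclasses import dataclass


@dataclass
class MoEConfig:
    """Hypothetical MoE model sizes; illustrative numbers, not any real model."""
    num_moe_layers: int      # layers whose feed-forward block is an MoE layer
    num_experts: int         # experts available in each MoE layer
    experts_per_token: int   # k: experts the router activates per token, per layer
    params_per_expert: int   # weights in one expert's feed-forward network
    shared_params: int       # attention, embeddings, etc. used for every token


def total_params(cfg: MoEConfig) -> int:
    """Every weight the model stores, whether or not a given token uses it."""
    return cfg.shared_params + cfg.num_moe_layers * cfg.num_experts * cfg.params_per_expert


def active_params(cfg: MoEConfig) -> int:
    """Weights used for one token: the shared ones plus k experts in each MoE layer."""
    return cfg.shared_params + cfg.num_moe_layers * cfg.experts_per_token * cfg.params_per_expert


cfg = MoEConfig(num_moe_layers=60, num_experts=128, experts_per_token=8,
                params_per_expert=50_000_000, shared_params=20_000_000_000)
total, active = total_params(cfg), active_params(cfg)
print(f"total:  {total / 1e9:.0f}B parameters")
print(f"active: {active / 1e9:.0f}B per token ({active / total:.1%} of the total)")
```

With these made-up sizes, the model stores 404B parameters but uses only 44B (about 10.9%) for each token, whereas a dense model of the same size would use all 404B. Per-token compute scales roughly with the active count, which is where the savings come from.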

Industry Adoption and Benchmark Achievements

The AI industry has been quick to recognize the value of MoE models: over 60% of this year’s open-source AI model releases use this architecture. On the Artificial Analysis leaderboard, the 10 most intelligent open-source models, including DeepSeek AI’s DeepSeek-R1 and Moonshot AI’s Kimi K2 Thinking, all use MoE architectures.

A critical factor in the success of these models is the hardware they run on. The NVIDIA GB200 NVL72 system, which combines hardware and software optimizations, enables these models to reach new levels of performance. For instance, the Kimi K2 Thinking MoE model achieves a 10x performance increase on the GB200 NVL72 compared with previous systems. This leap is not just about speed; it also makes token generation more efficient and cost-effective.

Breaking Down the MoE Model Architecture

MoE models are built from multiple specialized subnetworks, or “experts,” each of which tends to handle particular kinds of input. A component called the router decides which experts to activate for each input token, so processing is targeted and efficient. This design loosely mirrors the brain, which engages task-specific regions only as needed, and it economizes on compute in a similar way.
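
The router's job can be illustrated with one common routing scheme, top-k softmax gating, sketched below in plain Python. The router scores every expert with a learned weight vector, keeps the k highest-scoring experts, and mixes their outputs using renormalized gate weights. The function names, toy experts, and router weights are all hypothetical; production routers differ in detail (some apply the softmax before selecting the top k, and many add mechanisms to balance load across experts).

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(token: Vector, router_weights: List[Vector], k: int) -> List[Tuple[int, float]]:
    """Score every expert, keep the k best, and renormalize their gate weights."""
    # One logit per expert: dot product of the token with that expert's router row.
    logits = [sum(t * w for t, w in zip(token, row)) for row in router_weights]
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])
    return list(zip(top, gates))


def moe_forward(token: Vector, router_weights: List[Vector],
                experts: List[Expert], k: int = 2) -> Vector:
    """Run only the selected experts and sum their outputs, weighted by gate."""
    out = [0.0] * len(token)
    for expert_id, gate in route_top_k(token, router_weights, k):
        y = experts[expert_id](token)  # experts not selected are never evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Toy usage: four "experts" that just scale their input (real experts are small MLPs).
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
token = [0.5, 0.25]
print(route_top_k(token, router_weights, k=2))  # experts 2 and 0 are selected
print(moe_forward(token, router_weights, experts, k=2))
```

Only two of the four experts do any work for this token, which is the selective activation described above, in miniature.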

The NVIDIA GB200 NVL72 system plays a pivotal role in scaling MoE models. This rack-scale system connects 72 NVIDIA Blackwell GPUs that operate as a single cohesive unit, providing 1.4 exaflops of AI performance and 30TB of fast shared memory. This design allows for wide expert parallelism, in which a model's experts are spread across many GPUs, reducing the memory pressure on each GPU and accelerating communication between the GPUs that hold different experts.
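
Expert parallelism can be pictured as a mapping from GPUs to the experts each one stores. The sketch below builds that mapping for a single MoE layer using contiguous blocks of expert IDs. The expert count of 288 and the function name `place_experts` are hypothetical, chosen so that the experts divide evenly across both 8 and 72 GPUs.

```python
from typing import Dict, List


def place_experts(num_experts: int, num_gpus: int) -> Dict[int, List[int]]:
    """Map each GPU to the expert IDs it hosts, using contiguous blocks.

    This is one simple expert-parallel layout; real systems may place experts
    differently, for example to balance load.
    """
    if num_experts % num_gpus:
        raise ValueError("this sketch assumes the experts divide evenly across GPUs")
    per_gpu = num_experts // num_gpus
    return {gpu: list(range(gpu * per_gpu, (gpu + 1) * per_gpu)) for gpu in range(num_gpus)}


# A hypothetical layer with 288 experts, spread over 8 GPUs versus 72 GPUs.
for num_gpus in (8, 72):
    layout = place_experts(288, num_gpus)
    print(f"{num_gpus:>2} GPUs: {len(layout[0])} experts each, GPU 1 hosts {layout[1][:4]}...")
```

Spreading the same layer over 72 GPUs instead of 8 shrinks each GPU's share from 36 experts to 4.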

Overcoming Challenges in Scaling MoE Models

Despite these advantages, scaling MoE models for production is not without challenges. Distributing experts across multiple GPUs can run into memory limits and add communication latency. The extreme codesign of the NVIDIA GB200 NVL72 system addresses these bottlenecks. By distributing experts across up to 72 GPUs, the system reduces the number of experts each GPU holds, which lessens the pressure of loading parameters from memory and improves memory efficiency.
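
The effect of spreading experts more widely can be estimated with simple arithmetic: the expert weights each GPU must hold, and read from memory when those experts run, shrink in proportion to the number of GPUs. The sketch below works this out for a hypothetical model; the sizes, the 1-byte-per-parameter storage, and the function name are illustrative assumptions.

```python
import math

GB = 1e9  # decimal gigabytes


def expert_weight_bytes_per_gpu(num_experts: int, num_gpus: int, params_per_expert: int,
                                num_moe_layers: int, bytes_per_param: float) -> float:
    """Bytes of expert weights the most heavily loaded GPU must hold."""
    # When experts don't divide evenly, some GPU holds ceil(E / G) of them per layer.
    experts_per_gpu = math.ceil(num_experts / num_gpus)
    return experts_per_gpu * params_per_expert * num_moe_layers * bytes_per_param


# Hypothetical model: 288 experts per layer, 50M parameters per expert,
# 60 MoE layers, weights stored at 1 byte per parameter.
for num_gpus in (8, 72):
    per_gpu = expert_weight_bytes_per_gpu(288, num_gpus, 50_000_000, 60, 1)
    print(f"{num_gpus:>2} GPUs: {math.ceil(288 / num_gpus)} experts per layer per GPU, "
          f"{per_gpu / GB:.0f} GB of expert weights per GPU")
```

With these made-up sizes, going from 8 to 72 GPUs cuts each GPU's share of expert weights from 108 GB to 12 GB, leaving more memory for other data (for example, the attention KV cache) and reducing the weight bytes each GPU must stream.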

In addition, the NVLink Switch interconnect fabric provides high-bandwidth, low-latency communication between GPUs, enabling the rapid exchange of data that MoE models depend on when tokens are routed to experts on other GPUs. Other optimizations, such as the NVIDIA Dynamo inference framework and the NVFP4 low-precision format, further improve the performance of these models while preserving accuracy.
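
With experts spread across GPUs, each MoE layer typically needs two exchanges: tokens are dispatched to the GPUs that own their selected experts, and the expert outputs are sent back and combined. This pattern is usually implemented as an all-to-all collective. The sketch below only builds the send lists for one GPU and counts how many token-expert assignments must leave it; the routing decisions, the contiguous expert placement, and the function names are hypothetical, and no data actually moves.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Pair = Tuple[int, int]  # (token_id, expert_id)


def build_dispatch(token_experts: Dict[int, List[int]], experts_per_gpu: int) -> Dict[int, List[Pair]]:
    """Group one GPU's (token, expert) assignments by the GPU that owns each expert.

    Assumes contiguous-block placement: expert e lives on GPU e // experts_per_gpu.
    """
    sends: Dict[int, List[Pair]] = defaultdict(list)
    for token_id, expert_ids in token_experts.items():
        for expert_id in expert_ids:
            sends[expert_id // experts_per_gpu].append((token_id, expert_id))
    return dict(sends)


def local_vs_remote(sends: Dict[int, List[Pair]], src_gpu: int) -> Tuple[int, int]:
    """Count assignments served on the source GPU versus sent over the interconnect."""
    local = len(sends.get(src_gpu, []))
    remote = sum(len(pairs) for dst, pairs in sends.items() if dst != src_gpu)
    return local, remote


# Made-up top-2 routing decisions for four tokens that live on GPU 0,
# with 4 experts per GPU.
routing = {0: [1, 9], 1: [2, 3], 2: [17, 30], 3: [0, 25]}
sends = build_dispatch(routing, experts_per_gpu=4)
print(sends)
print(local_vs_remote(sends, src_gpu=0))  # (4, 4): half the work leaves GPU 0
```

Even in this tiny example, half of the assignments cross the interconnect, and this traffic recurs in every MoE layer for every batch of tokens. That is why interconnect bandwidth and latency weigh so heavily on MoE inference.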

Practical Applications and Industry Impact

MoE models have practical applications across the many sectors that rely on AI. Major cloud service providers, including Amazon Web Services and Google Cloud, are deploying the GB200 NVL72 system to serve these models. Enterprises such as DeepL are using this technology to advance their own AI models, pushing both efficiency and performance further.

The economic benefits are also significant. The improved performance per watt translates into a 10x gain in token generation efficiency, which is crucial for data centers constrained by power and cost. This efficiency expands what AI systems can deliver while lowering operational costs and increasing return on investment.
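
The link between performance per watt and token economics can be written out with two simple identities. Here P is the power a deployment draws, R its token throughput in tokens per second, and η its tokens per second per watt; these symbols are introduced only for this illustration.

```latex
\eta = \frac{R}{P}, \qquad
R = \eta \, P, \qquad
E_{\text{token}} = \frac{P}{R} = \frac{1}{\eta}
```

At a fixed power budget P, raising η by 10x raises throughput R by 10x and cuts the energy per token E_token by the same factor. How much of that appears as a lower cost per token depends on how much of the operating cost scales with energy.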

Future Prospects and the Road Ahead

The future of AI is poised to be shaped by the continued evolution of MoE models. As AI systems become more multimodal, with specialized components for language, vision, and other modalities, the MoE architecture will likely be adapted to accommodate these developments. This could lead to even greater efficiencies, with shared pools of experts serving multiple applications and users.

In conclusion, Mixture-of-Experts models are redefining the AI landscape, offering a scalable, efficient, and powerful architecture for future developments. The NVIDIA GB200 NVL72 system is at the forefront of this transformation, unlocking the full potential of MoE models and setting the stage for the next generation of intelligent systems. As we move forward, the principles of MoE will continue to guide the development of AI, ensuring that massive capability and efficiency go hand in hand.

For more technical detail on how MoE models are scaled with NVIDIA technology, see the breakdown in NVIDIA’s official resources. These advancements are covered in an ongoing series focused on improving AI inference performance and maximizing return on investment.

For more information, refer to this article.