Embracing the Rise of Autonomous AI Systems: A New Era in Enterprise Software Development

Agentic AI systems mark a significant departure from the way software has traditionally been built and deployed. These systems operate with a high degree of autonomy: they set their own goals and execute tasks across platforms and interfaces without continuous human oversight. That autonomy creates real opportunities for efficiency and innovation. It also introduces security challenges that traditional identity and access management (IAM) frameworks were never designed to handle.

The Surge of Non-Human Identities

Enterprise security models were historically built on the premise that identities represent human users who can be held accountable for their actions. Cloud computing eroded that assumption by driving a surge in non-human identities (NHIs), which represent machines, applications, and services. Autonomous AI agents accelerate the trend further. They add large numbers of NHIs that operate with minimal human oversight and often execute thousands of actions per day. These identities pose distinct security challenges because they frequently rely on static credentials, hold excessive privileges, and lack proper accountability mechanisms.

Industry statistics show the scale of the problem: NHIs now outnumber human users by a ratio of 50:1, and 97% of these identities hold excessive privileges. As a result, NHI exploitation has become one of the most pressing cybersecurity threats. The consequences are already visible across industries, where prompt injection and overly permissive access have led to significant data breaches and intellectual property leaks.

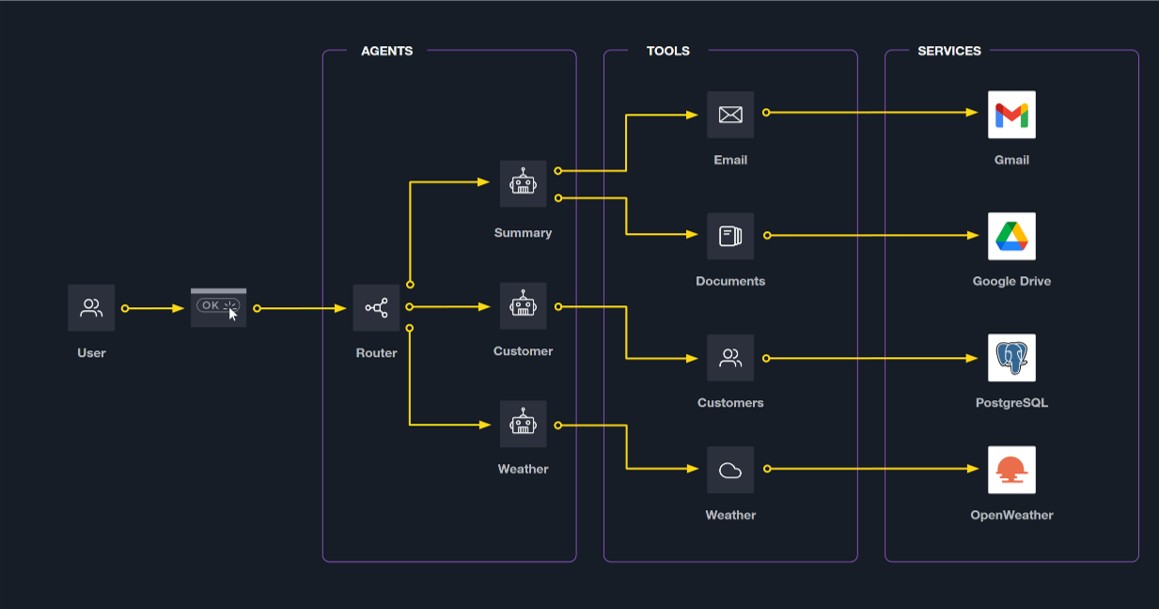

Understanding the Architecture of Agentic Systems

To grasp the security challenges posed by agentic systems, it helps to understand how they differ from traditional AI models. Traditional AI models function as sophisticated pattern matchers: they process an input and produce an output. Agentic systems, in contrast, act as autonomous entities within an organization’s infrastructure, making independent decisions without constant human intervention.

Agentic AI systems are distinguished by three critical attributes:

- Autonomy: These systems make independent decisions and act without supervision, so security controls must be built directly into the system rather than supplied by a human reviewer.

- Adaptive Learning: Agentic systems continuously learn and adapt their behavior based on new information and feedback, potentially leading to unexpected shifts in behavior patterns.

- Expanded Attack Surface: These systems interact with numerous external platforms and services, creating multiple potential entry points for cyber attackers.

Exploring Agentic Vulnerabilities

Some vulnerabilities in agentic systems are genuinely new, but many are familiar security problems that become more dangerous when autonomous agents are involved. Common categories of agentic exploits include:

- Identity and Authentication Failures: The "confused deputy" problem arises when a highly privileged service acts on behalf of a less-privileged user without enforcing that user's identity boundaries, effectively lending the user its own privileges.

- Credential and Secret Management: Long-lived credentials, hard-coded secrets, and lack of rotation create persistent vulnerabilities.

- Tool and Integration Exploits: Tool poisoning attacks can lead to unauthorized actions and data exfiltration, and APIs that lack robust authentication are particularly vulnerable.

- Supply Chain Attacks: Malicious actors can exploit server registries and update mechanisms to inject harmful code.

- Multi-Agent System Threats: Attackers can tamper with agent communications, spreading false information throughout the system.

- Prompt-Based Attacks: Carefully crafted inputs, whether entered directly or hidden in content the agent processes, can override agent instructions and lead to unauthorized actions.

- Data Security Threats: Attackers can inject malicious data into the knowledge bases that agents rely on, compromising the accuracy of their outputs.

- Runtime and Operational Threats: Agents can be tricked into using tools with incorrect credentials or elevated privileges.

- Detection and Guardrail Evasion: Attackers can exploit different input types to embed malicious instructions in formats that detection systems fail to catch.

- Compliance and Governance Gaps: Insufficient audit trails can hinder compliance and traceability of agent actions.
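To make the confused deputy problem concrete, here is a minimal Python sketch. It assumes a hypothetical agent that holds its own set of scopes and acts on behalf of a requesting user; the `Principal` type, the helper functions, and the scope strings are illustrative only and do not come from any particular library. The mitigation shown is to authorize each delegated action against the intersection of the agent's scopes and the user's scopes, so the agent never lends its own extra privileges to a less-privileged caller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    """A hypothetical identity (human user or agent) with a set of granted scopes."""
    name: str
    scopes: frozenset


def effective_scopes(agent: Principal, on_behalf_of: Principal) -> frozenset:
    # A delegated action may only use scopes that BOTH the agent and the
    # user it acts for hold; the agent's extra privileges never leak through.
    return agent.scopes & on_behalf_of.scopes


def naive_allowed(agent: Principal, user: Principal, scope: str) -> bool:
    # Confused deputy: only the agent's privileges are checked and the
    # requesting user's identity is ignored entirely.
    return scope in agent.scopes


def delegated_allowed(agent: Principal, user: Principal, scope: str) -> bool:
    # Identity boundary enforced: the user must also hold the scope.
    return scope in effective_scopes(agent, user)


agent = Principal("billing-agent", frozenset({"invoices:read", "invoices:write", "payments:refund"}))
intern = Principal("intern", frozenset({"invoices:read"}))

print(naive_allowed(agent, intern, "payments:refund"))      # True: the deputy is confused
print(delegated_allowed(agent, intern, "payments:refund"))  # False: denied
print(delegated_allowed(agent, intern, "invoices:read"))    # True: both hold this scope
```

Intersecting scopes is one simple way to enforce identity boundaries; standards-based delegation (for example, token exchange that records both the acting agent and the original user) achieves the same goal across service boundaries.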

The Magnitude of the Threat

The impact of agentic exploits is already measurable: prompt-based attacks account for 35.3% of documented AI incidents. Even basic prompt injections have caused significant losses, resulting in unauthorized transactions, fake agreements, and brand-damaging behavior. The discovery of the first zero-click AI vulnerability, one that can be exploited without any user interaction, further underscores the seriousness of the threat.

Challenges in the Development Lifecycle

Security vulnerabilities are not limited to production environments; they can be introduced at any stage of the agentic development lifecycle. The emerging trend of "vibecoding," where developers rely heavily on AI-generated code, can lead to two significant problems:

- Experienced developers may become complacent, skipping code reviews that would catch security issues.

- Junior developers may gain capabilities beyond their skill level, building complex integrations without the foundational knowledge to identify security problems.

A case study of a supply chain attack illustrates how malicious actors can exploit AI-assisted development, emphasizing the need for vigilance at every stage of the software development process.

Embracing Zero Trust Principles

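Traditional perimeter-based defense models are inadequate for agentic architectures, because agents call tools, APIs, and other agents across many environments, leaving no single network boundary to defend. Zero trust principles offer a more robust foundation for securing these systems: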

- Identity-Based Access: Every agent and tool must have a verifiable identity, with actions executed on behalf of validated users with scoped permissions.

- Dynamic Authorization: Permissions are evaluated in real time on every request, so each action is backed by a verified identity and current policy rather than a one-time grant.

- Short-Lived Credentials: Credentials generated just in time, with short lifetimes, limit the damage if they are leaked.

- Comprehensive Audit Trails: Every action should be traceable to a human authorizer for compliance and forensics.

- Encrypted Communications: All communications must use TLS to prevent credential capture through packet sniffing.
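Several of these principles can be combined in one small sketch. The Python example below assumes a hypothetical HMAC-signed token format rather than any specific product's. It issues a short-lived credential bound to an agent, the human who authorized it, and a list of scopes; re-checks signature, expiry, and scope on every request; and appends each decision, allowed or denied, to an audit log that names the human authorizer. The module-level signing key and in-memory `AUDIT_LOG` are stand-ins for a secrets manager and an append-only audit store, and TLS is out of scope here.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import Optional

# Hypothetical signing key; in practice it would come from a secrets manager.
SIGNING_KEY = secrets.token_bytes(32)
AUDIT_LOG = []  # in-memory stand-in for an append-only audit store


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode().rstrip("=")


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(body: str) -> str:
    return _b64(hmac.new(SIGNING_KEY, body.encode(), hashlib.sha256).digest())


def issue_credential(agent_id: str, authorized_by: str, scopes: list, ttl_seconds: int = 300) -> str:
    """Mint a just-in-time credential bound to an agent, its human authorizer, and scopes."""
    claims = {
        "agent": agent_id,
        "authorized_by": authorized_by,
        "scopes": sorted(scopes),
        "exp": int(time.time()) + ttl_seconds,
    }
    body = _b64(json.dumps(claims, sort_keys=True).encode())
    return f"{body}.{_sign(body)}"


def authorize(token: str, action: str, now: Optional[float] = None) -> bool:
    """Evaluate each request at call time: signature, expiry, and scope."""
    now = time.time() if now is None else now
    decision, claims = False, {}
    try:
        body, sig = token.split(".")
        if hmac.compare_digest(sig, _sign(body)):
            claims = json.loads(_unb64(body))
            decision = now < claims["exp"] and action in claims["scopes"]
    except (ValueError, KeyError):
        decision = False  # malformed tokens are simply denied
    # Every decision is recorded with the human who authorized the agent.
    AUDIT_LOG.append({
        "time": now,
        "agent": claims.get("agent"),
        "authorized_by": claims.get("authorized_by"),
        "action": action,
        "allowed": decision,
    })
    return decision


token = issue_credential("report-agent", "alice@example.com", ["reports:read"], ttl_seconds=60)
print(authorize(token, "reports:read"))                          # True
print(authorize(token, "reports:delete"))                        # False: outside granted scope
print(authorize(token, "reports:read", now=time.time() + 120))   # False: expired
print(AUDIT_LOG[-1]["authorized_by"])                            # alice@example.com
```

The hand-rolled token keeps the example self-contained; a production system would more likely use a standard token format such as JWT, asymmetric signing, and a dedicated policy engine.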

Implementing Zero Trust with HashiCorp Vault

Translating zero trust principles into practice requires tools that enforce identity-based access control, manage secrets at scale, automate credential lifecycles, and provide comprehensive audit capabilities. HashiCorp Vault has emerged as a foundational platform for these capabilities, offering a policy-driven approach to identity and secrets management that scales across environments. Vault’s capabilities map directly onto the security challenges of agentic systems:

- Secret Discovery and Remediation: HCP Vault Radar scans for unmanaged secrets and provides alerts and guided remediation.

- Dynamic Secret Generation: Vault enables just-in-time secret generation, reducing the window of exploitability.

- Automated Certificate Management: Ensures consistent encryption through automated certificate issuance and renewal.

- Identity-Based Access Control: Provides a consistent identity layer across clouds and identity providers.

- Comprehensive Audit Logging: Logs every operation with full context for compliance and forensics.
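To illustrate dynamic secret generation, here is a minimal Python sketch that requests a short-lived database credential through Vault's HTTP API using only the standard library. It assumes the database secrets engine is enabled at its default `database/` mount path, that a role has been configured for the agent (the role name is up to you and hypothetical here), and that `VAULT_ADDR` and `VAULT_TOKEN` are set in the agent's environment. The response fields it reads (`lease_id`, `lease_duration`, `data.username`, `data.password`) follow Vault's secret response shape; verify them against the documentation for your Vault version.

```python
import json
import os
import time
import urllib.request
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DynamicCredential:
    username: str
    password: str
    lease_id: str
    lease_duration: int  # seconds, as reported by Vault
    issued_at: float     # local timestamp when the credential was fetched

    def renew_at(self, fraction: float = 0.8) -> float:
        # Schedule renewal (or a re-fetch) well before the lease runs out.
        return self.issued_at + self.lease_duration * fraction


def _vault_request(path: str, body: Optional[dict] = None, method: str = "GET") -> dict:
    """Call the Vault HTTP API using VAULT_ADDR and VAULT_TOKEN from the environment."""
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(
        f"{os.environ['VAULT_ADDR']}/v1/{path}",
        data=data,
        headers={"X-Vault-Token": os.environ["VAULT_TOKEN"]},
        method=method,
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        raw = resp.read()
    # Some endpoints (such as lease revocation) may return an empty body.
    return json.loads(raw) if raw else {}


def parse_creds_response(payload: dict, issued_at: float) -> DynamicCredential:
    """Extract the username, password, and lease metadata from a creds response."""
    return DynamicCredential(
        username=payload["data"]["username"],
        password=payload["data"]["password"],
        lease_id=payload["lease_id"],
        lease_duration=int(payload["lease_duration"]),
        issued_at=issued_at,
    )


def fetch_db_credential(role: str) -> DynamicCredential:
    """Ask Vault to generate a fresh database credential for `role`."""
    payload = _vault_request(f"database/creds/{role}")
    return parse_creds_response(payload, issued_at=time.time())


def revoke_credential(cred: DynamicCredential) -> None:
    """Revoke the lease early so the credential stops working once the task is done."""
    _vault_request("sys/leases/revoke", body={"lease_id": cred.lease_id}, method="PUT")
```

An agent would call `fetch_db_credential(...)` at the start of a task, use the returned username and password, and call `revoke_credential(...)` when finished. Because each credential carries its own lease, a leaked password stops working when the lease expires or is revoked instead of persisting indefinitely. Raw HTTP keeps the sketch dependency-free; in practice a maintained client library such as `hvac` is a common choice in Python.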

Achieving Measurable Security Outcomes

Implementing zero trust principles for agentic systems leads to concrete improvements:

- Reduced Attack Surface: Eliminating static credentials and excessive access removes entire classes of vulnerabilities.

- Improved Secret Hygiene: Centralized management ensures proper secret handling.

- Scalable Identity Management: A unified identity layer with dynamic credentials scales across many agents and simplifies forensics, because each credential traces back to a single identity.

- Resilient PKI Architecture: Automated certificate management ensures consistent encryption.

- Future-Proof Security: Zero trust architectures adapt to emerging AI risks without major rewrites.

A Pragmatic Path Forward

Security teams must acknowledge the reality that agentic AI systems are being deployed faster than security solutions are developed. A pragmatic approach involves applying current best practices with proven tools while planning for future vulnerabilities. Organizations should treat every agent as untrusted, enforce identity-based access, generate credentials dynamically, encrypt all communications, and maintain comprehensive audit trails.

By implementing zero trust architectures now, organizations can scale AI capabilities securely and be better positioned to withstand increasingly sophisticated attacks. The time to act is now, before agentic systems become critical infrastructure without adequate security controls.

For further guidance on implementing zero trust for agentic systems, explore HashiCorp Vault’s identity-based security capabilities and HCP Vault Radar for secret discovery and remediation.
