Amazon Bedrock today introduced new service tiers that give businesses more flexibility in managing their AI workloads, so they can optimize costs without compromising performance. The tiers are meant to help organizations balance cost efficiency against the performance requirements of their diverse AI applications.

Overview of Amazon Bedrock’s New Service Tiers

Amazon Bedrock’s new offering introduces three distinct service tiers: Priority, Standard, and Flex. Each tier is tailored to meet specific workload requirements, addressing the varying needs of applications in terms of response times and cost considerations.

- Priority Tier: This tier is designed for mission-critical applications that require the quickest response times. It provides priority compute resources, ensuring that requests are processed ahead of others. This is particularly beneficial for applications like real-time language translation services and customer-facing chat-based assistants, where rapid response is crucial. However, this tier comes at a higher cost due to the premium service it provides.

- Standard Tier: Ideal for everyday AI tasks, the Standard tier offers consistent performance at regular rates. It is best suited for applications such as content generation, text analysis, and routine document processing. This tier balances performance and cost efficiently, making it perfect for business operations that require reliable yet cost-effective AI solutions.

- Flex Tier: For workloads that can tolerate longer processing times, the Flex tier offers a cost-effective solution. This tier is perfect for applications like model evaluations, content summarization, and multistep analytical workflows. By opting for this tier, businesses can significantly reduce costs while still achieving satisfactory performance levels.

Choosing the Right Tier for Your AI Workloads

Selecting the appropriate service tier for your AI workloads is crucial for optimizing both performance and costs. Here are some guidelines to help you make informed decisions:

- Mission-Critical Applications: For applications that are critical to business operations and require immediate responses, the Priority tier is recommended. This includes applications like customer service chat assistants and real-time language translation, where lower latency is essential.

- Business-Standard Applications: For important workloads that need responsive performance but are not as time-sensitive as mission-critical applications, the Standard tier is suitable. Use this tier for tasks like content generation and routine document processing.

- Business-Noncritical Applications: For less urgent workloads that can afford longer processing times, the Flex tier is the most cost-efficient option. This tier is ideal for model evaluations and content summarization tasks.
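The guidelines above can be captured as a small lookup. This is a minimal sketch, not an official mapping: the `Criticality` enum and `choose_service_tier` helper are hypothetical names introduced here, and it assumes the Standard tier corresponds to the `"default"` value of the `service_tier` request parameter.

```python
from enum import Enum


class Criticality(Enum):
    """Workload categories named in the guidelines (hypothetical helper type)."""
    MISSION_CRITICAL = "mission-critical"
    BUSINESS_STANDARD = "business-standard"
    BUSINESS_NONCRITICAL = "business-noncritical"


# Assumption: the Standard tier is requested with the value "default".
TIER_BY_CRITICALITY = {
    Criticality.MISSION_CRITICAL: "priority",
    Criticality.BUSINESS_STANDARD: "default",
    Criticality.BUSINESS_NONCRITICAL: "flex",
}


def choose_service_tier(criticality: Criticality) -> str:
    """Return the service_tier value suggested for a workload category."""
    return TIER_BY_CRITICALITY[criticality]


print(choose_service_tier(Criticality.BUSINESS_NONCRITICAL))
```

Keeping the mapping in one place makes it easy to revisit the choice for a workload category after you have measured its latency and cost.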

To implement these tiers effectively, start by reviewing your current application usage patterns. Identify which workloads require immediate processing and which can be handled more gradually. You can then test different tiers by routing a small portion of your traffic through them to evaluate performance and cost benefits.
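One way to route a small portion of traffic through a different tier is a per-request random split. The sketch below is illustrative only: `pick_tier` is a hypothetical helper, the 5% share is an arbitrary example value, and the returned string is assumed to be passed as the `service_tier` value on each request.

```python
import random


def pick_tier(baseline: str, candidate: str, candidate_share: float,
              rng: random.Random) -> str:
    """Return `candidate` for roughly `candidate_share` of calls, else `baseline`."""
    if not 0.0 <= candidate_share <= 1.0:
        raise ValueError("candidate_share must be between 0 and 1")
    return candidate if rng.random() < candidate_share else baseline


# Simulate 10,000 requests with about 5% sent to the tier under evaluation.
rng = random.Random(42)  # seeded so the simulation is repeatable
picks = [pick_tier("default", "flex", 0.05, rng) for _ in range(10_000)]
flex_fraction = picks.count("flex") / len(picks)
print(f"{flex_fraction:.1%} of simulated requests routed to flex")
```

Recording the chosen tier alongside each request's latency and token counts lets you compare the two groups before changing the share.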

Tools for Cost and Performance Management

Amazon offers several tools to help businesses estimate and manage their costs and performance across different service tiers. The AWS Pricing Calculator is a useful tool for estimating costs based on your expected workload for each tier. By inputting your specific usage patterns, you can get a clearer picture of your budget requirements.
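Before opening the calculator, the same arithmetic can be roughed out in a few lines. This is a back-of-the-envelope sketch: `estimate_monthly_cost` is a hypothetical helper, and the prices and token volumes in the usage example are placeholders, not actual Amazon Bedrock rates. Substitute the published per-tier prices for your model and Region.

```python
def estimate_monthly_cost(input_tokens: int, output_tokens: int,
                          input_price_per_million: float,
                          output_price_per_million: float) -> float:
    """Estimate cost from token volumes and per-million-token prices."""
    input_cost = input_tokens / 1_000_000 * input_price_per_million
    output_cost = output_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost


# Placeholder numbers for illustration only (NOT real Bedrock pricing):
# 50M input tokens at 1.00 per million, 10M output tokens at 2.00 per million.
example_cost = estimate_monthly_cost(50_000_000, 10_000_000, 1.00, 2.00)
print(f"Estimated monthly cost: {example_cost:.2f}")
```

Running the estimate once per tier with that tier's prices gives a quick side-by-side view of the cost difference for the same workload.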

To monitor usage and costs, you can use the AWS Service Quotas console or enable model invocation logging in Amazon Bedrock. Viewing the resulting metrics in Amazon CloudWatch gives visibility into your token usage and helps you track performance across tiers.
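As a rough sketch of pulling token usage from CloudWatch, the helper below builds the arguments for the CloudWatch `get_metric_statistics` call. It assumes the `AWS/Bedrock` namespace, the `InputTokenCount` metric, and the `ModelId` dimension; verify these names against the Bedrock monitoring documentation for your account. `token_metric_query` is a hypothetical helper, and the commented lines assume `boto3` is installed with AWS credentials configured.

```python
from datetime import datetime, timedelta, timezone


def token_metric_query(model_id: str, metric_name: str,
                       end: datetime, hours: int = 24) -> dict:
    """Build keyword arguments for CloudWatch get_metric_statistics."""
    return {
        "Namespace": "AWS/Bedrock",  # assumed namespace for Bedrock metrics
        "MetricName": metric_name,   # e.g. "InputTokenCount" (assumed name)
        "Dimensions": [{"Name": "ModelId", "Value": model_id}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 3600,              # one datapoint per hour
        "Statistics": ["Sum"],
    }


query = token_metric_query(
    "openai.gpt-oss-20b-1:0", "InputTokenCount", datetime.now(timezone.utc)
)

# With boto3 and credentials available, the query could be run like this:
#   import boto3
#   response = boto3.client("cloudwatch").get_metric_statistics(**query)
#   hourly_sums = [point["Sum"] for point in response["Datapoints"]]
```

Building the arguments separately from the call keeps the query easy to inspect and reuse for other metrics, such as output token counts.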

Implementing the New Service Tiers

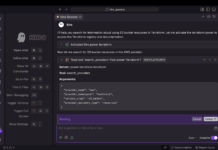

Businesses can start using these new service tiers immediately. You select a service tier on a per-API-call basis, which gives you flexibility in how you manage your AI workloads. Here’s a simple example using the OpenAI Chat Completions API:

```python
import os

from openai import OpenAI

client = OpenAI(
    base_url="https://bedrock-runtime.us-west-2.amazonaws.com/openai/v1",
    # Read the Amazon Bedrock API key from the environment instead of hardcoding it
    api_key=os.environ["AWS_BEARER_TOKEN_BEDROCK"],
)

completion = client.chat.completions.create(
    model="openai.gpt-oss-20b-1:0",
    messages=[
        {"role": "developer", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello!"},
    ],
    service_tier="priority",  # options: "priority", "default", or "flex"
)

print(completion.choices[0].message)
```

Additional Resources and Support

To learn more about these service tiers and how to implement them effectively, you can refer to the Amazon Bedrock User Guide. For personalized assistance with planning and implementation, contacting your AWS account team is recommended.

Businesses are encouraged to share their experiences with these new pricing options and how they have optimized their AI workloads. Feedback can be shared online through social networks or during AWS events.

For more information and updates, visit Amazon Bedrock and explore the wide array of resources available to support your AI workload management.
